Wave field synthesis: Difference between revisions


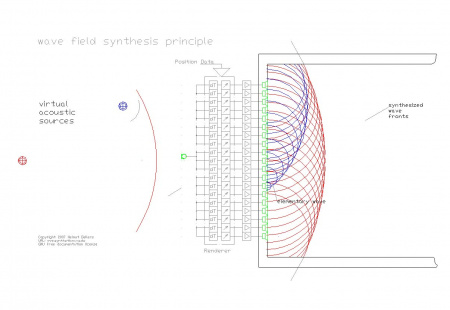

[[image:Principle_wfs.jpg|thumb|right|450px|WFS Principle, see as 60 sec. animation in demo below]]

== '''Wave field synthesis''' ==

*[http://www.syntheticwave.de/Principle%20of%20wave%20field%20synthesis.htm DEMO Wave field synthesis (Animation)]

[[Category:Technical]]

Latest revision as of 13:33, 26 December 2007

Wave field synthesis (or WFS) is a spatial sound reproduction principle. It is physically based and does not rely on psychoacoustic effects, as earlier sound reproduction methods do.

Berkhout invented the procedure in 1988 at the Delft University of Technology, based on the Huygens principle. The mathematical basis is the Kirchhoff-Helmholtz integral, which states that if the sound pressure and velocity on the boundary of a source-free volume are known, the pressure and velocity at every point inside that volume are determined.

The idea can be described as follows: according to the Huygens principle, the wave front of a natural acoustic source is built up from many elementary waves. A computer synthesis drives each of the individual loudspeakers, arranged in rows around the listener, at exactly the moment at which the wave front of the virtual source would pass through that loudspeaker's position. In this way, according to the Huygens principle, the primary wave front is physically reconstructed.
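The timing rule in the paragraph above can be illustrated with a minimal Python sketch. It is a simplified delay-and-attenuate model, not a full WFS driving function: each loudspeaker is delayed by the travel time from the virtual source and scaled by 1/distance. The function name `speaker_delays`, the speed of sound of 343 m/s, and the example layout are assumptions made for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at about 20 °C


def speaker_delays(source, speakers, c=SPEED_OF_SOUND):
    """Return (delay_s, gain) per speaker for a virtual point source.

    Simplified model: each speaker fires when the virtual wave front
    would pass its position (delay = distance / c), with 1/distance gain.
    """
    distances = [math.dist(source, spk) for spk in speakers]
    nearest = min(distances)
    # Subtract the smallest travel time so the nearest speaker fires at t = 0,
    # and normalise the gain so the nearest speaker has gain 1.
    return [((d - nearest) / c, nearest / d) for d in distances]


# Hypothetical layout: 9 speakers, 0.2 m apart on the x axis,
# virtual source 2 m behind the middle of the row.
speakers = [(0.2 * i - 0.8, 0.0) for i in range(9)]
source = (0.0, -2.0)
for (x, _), (delay, gain) in zip(speakers, speaker_delays(source, speakers)):
    print(f"x = {x:+.1f} m  delay = {delay * 1000:.3f} ms  gain = {gain:.3f}")
```

The speakers farther from the virtual source fire later and more quietly, so their elementary waves add up to a curved wave front that appears to originate at the virtual source position.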

The origin of the synthesized wave front is a virtual acoustic source, which hardly differs from a real acoustic source. Unlike the phantom source between conventional loudspeakers, it does not appear to move when listeners move within the listening area. As with a real source, it is always localized at its virtual origin. This origin can lie in front of or behind the loudspeaker row, so convex or concave wave fronts can be produced. Because the loudspeaker lines are arranged around the listener, a virtual acoustic source can be placed at any point in the unbounded horizontal plane of the listener. Using the delay and level information stored in the impulse response of the recording room, or the model-based mirror source approach, it is also possible to reproduce the acoustic behaviour of the recording room.

At the German Fraunhofer Institute, the MPEG-4 transmission standard was developed for the separate transmission of content (the audio signal) and form (the acoustic data). As an example, Caruso was clearly a mono sound source, so it is sufficient to transmit the pure audio information in a single mono channel. The spatial sound field in La Scala in Milan arises solely from a large number of spatially distributed mirror sound sources. Their positions in the room determine the signal delays at the listener, and the reflection factors of the surfaces influence the frequency response and level of these mirror sound sources. The signal itself, however, always remains Caruso's mono source. Conventional methods try to reduce the spatial distribution of those sound sources to a few audio channels, which always causes a loss of spatial information. Synthesis on the reproduction side is much more effective, because in principle all sound sources can be mapped at their correct positions. Each primary source needs a separate channel.
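The mirror source idea described above can be sketched in a few lines of Python. The sketch assumes a two-dimensional rectangular room, first-order reflections only, and a single frequency-independent reflection factor of 0.8; real surfaces would need frequency-dependent factors. All function names and values are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed


def first_order_mirror_sources(source, room, reflection=0.8):
    """First-order mirror sources of a 2D rectangular room.

    room = (width, depth) with walls at x = 0, x = width, y = 0, y = depth.
    reflection is an assumed, frequency-independent reflection factor.
    Returns a list of (position, reflection_factor) pairs.
    """
    sx, sy = source
    width, depth = room
    return [
        ((-sx, sy), reflection),             # mirrored at wall x = 0
        ((2 * width - sx, sy), reflection),  # mirrored at wall x = width
        ((sx, -sy), reflection),             # mirrored at wall y = 0
        ((sx, 2 * depth - sy), reflection),  # mirrored at wall y = depth
    ]


def arrivals(source, listener, room, c=SPEED_OF_SOUND):
    """Delay (s) and relative level of the direct sound and first reflections,
    sorted by arrival time. Level falls off with 1/distance."""
    paths = [(source, 1.0)] + first_order_mirror_sources(source, room)
    result = []
    for position, factor in paths:
        distance = math.dist(position, listener)
        result.append((distance / c, factor / distance))
    return sorted(result)


# Hypothetical hall of 20 m x 30 m, singer at (10, 5), listener at (10, 20).
for delay, level in arrivals((10.0, 5.0), (10.0, 20.0), (20.0, 30.0)):
    print(f"delay = {delay * 1000:6.1f} ms  relative level = {level:.4f}")
```

Every entry carries the same mono signal; only its delay and level differ, which is why the transmitted content can stay a single channel while the room is described separately.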

The result of such a reproduction closely approximates a live performance. Currently the most significant difference is the restriction to the horizontal plane of the listener. Because the reproduction in test setups is otherwise almost perfect, this restriction seems to be more audible than with conventional loudspeaker arrangements. A fundamental problem is that the acoustics of the reproduction room must be suppressed, because the speaker rows generate the acoustics of the recording room, including strong early reflections and reverberation. Consequently, the reflections of the reproduction room are disturbing signals.

Another approach, “sound field transformation”, deliberately includes those reflections in the synthesis. It appears to be comprehensive, but has hardly been realizable to date. Nevertheless, the prospects for wave field synthesis are breathtaking: in the future it may become possible to physically recreate the entire sound field of an opera house in the living room.

The primary problem so far is the high effort. On the one hand, the individual speakers must be spaced very closely; otherwise, spatial aliasing effects become audible. On the other hand, the length of the speaker row, or the extent of the speaker field, determines the low-frequency limit and the reproduction range. The close speaker spacing cannot be given up, however, and it leads to a large number of speakers, each of which needs its own signal. That currently justifies the restriction to lines, although the principle itself is not fundamentally restricted to the plane.
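The trade-off between speaker spacing and channel count can be estimated with a short Python sketch. It uses the commonly quoted worst-case rule of thumb that spatial aliasing starts at roughly f = c / (2 · spacing); this formula is not given in the text above and is an assumption here, as are the function names and the 4 m row length.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed


def aliasing_frequency(spacing_m, c=SPEED_OF_SOUND):
    """Rule-of-thumb frequency (Hz) above which spatial aliasing can occur."""
    return c / (2.0 * spacing_m)


def speakers_needed(row_length_m, spacing_m):
    """Speakers in one row with a speaker at both ends.

    Assumes the row length is a multiple of the spacing.
    """
    return round(row_length_m / spacing_m) + 1


# Hypothetical 4 m speaker row with three different spacings.
for spacing in (0.20, 0.10, 0.05):
    print(
        f"spacing = {spacing:.2f} m  "
        f"aliasing above about {aliasing_frequency(spacing):.0f} Hz  "
        f"speakers (channels) = {speakers_needed(4.0, spacing)}"
    )
```

Halving the spacing doubles the aliasing frequency but also roughly doubles the number of individually driven channels, which is the effort the paragraph above refers to.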

Furthermore, specially produced audio material is hardly available at the moment. Nevertheless, the reproduction of conventional material can already be clearly improved. Wave field synthesis can generate “virtual panning spots”: virtual sources fed with the content of the corresponding channel, which can be placed far beyond the walls of the listening room. Listener movement inside the reproduction area then has less effect, because its influence on level and angle diminishes. Solving the "sweet spot" problem in this manner is a main capability of wave field synthesis.
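The benefit of distant virtual panning spots can be checked with simple geometry. The following Python sketch assumes an ideal point source with 1/r level decay and a listener who starts on the source axis and steps sideways; the function name and the distances are hypothetical.

```python
import math


def angle_and_level_change(source_distance_m, listener_shift_m):
    """Effect of a sideways listener step on a source straight ahead.

    Returns (change of source direction in degrees, level change in dB),
    assuming a point source whose level falls off with 1/distance.
    """
    new_distance = math.hypot(source_distance_m, listener_shift_m)
    angle_deg = math.degrees(math.atan2(listener_shift_m, source_distance_m))
    level_db = 20.0 * math.log10(source_distance_m / new_distance)
    return angle_deg, level_db


# Listener moves 1 m sideways; source is 2 m away (a real speaker in the room)
# or 20 m away (a virtual panning spot beyond the walls).
for distance in (2.0, 20.0):
    angle, level = angle_and_level_change(distance, 1.0)
    print(f"source at {distance:4.1f} m: angle change = {angle:5.2f} deg, "
          f"level change = {level:6.3f} dB")
```

The farther the virtual source, the smaller both changes become, so the stereo image stays more stable as the listener moves through the reproduction area.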

References

- Berkhout, A.J.: A Holographic Approach to Acoustic Control, J. Audio Eng. Soc., vol. 36, December 1988, pp. 977-995

- Berkhout, A.J.; De Vries, D.; Vogel, P.: Acoustic Control by Wave Field Synthesis, J. Acoust. Soc. Am., vol. 93, May 1993, pp. 2764-2778